Summary

GPUs are built for highly parallel workloads. But many modern jobs—especially AI inference tasks—don’t need the full compute power of a high-end GPU. When a small workload locks up the resources of an entire GPU, the result is underutilization, higher costs, and wasted resources.

Pepperdata leverages NVIDIA’s Multi-Instance GPU (MIG) technology to automate GPU slicing for maximized utilization and significantly reduced costs in both cloud and on-premises environments.

The Challenge: GPU Underutilization in AI and Data Workloads

AI inference, machine learning, and other GPU-intensive workloads vary widely in their resource requirements. Without proper resource sizing, users tend to request far more than a workload needs, simply to make sure it runs without resource issues.

We have seen this happen in almost every organization, in every vertical. Until now the problem has mostly concerned CPU, memory, and to some extent disk space, but with GPUs it is far more serious because the costs are an order of magnitude higher.

The Solution: Automated GPU Slicing with Pepperdata Resource Optimization

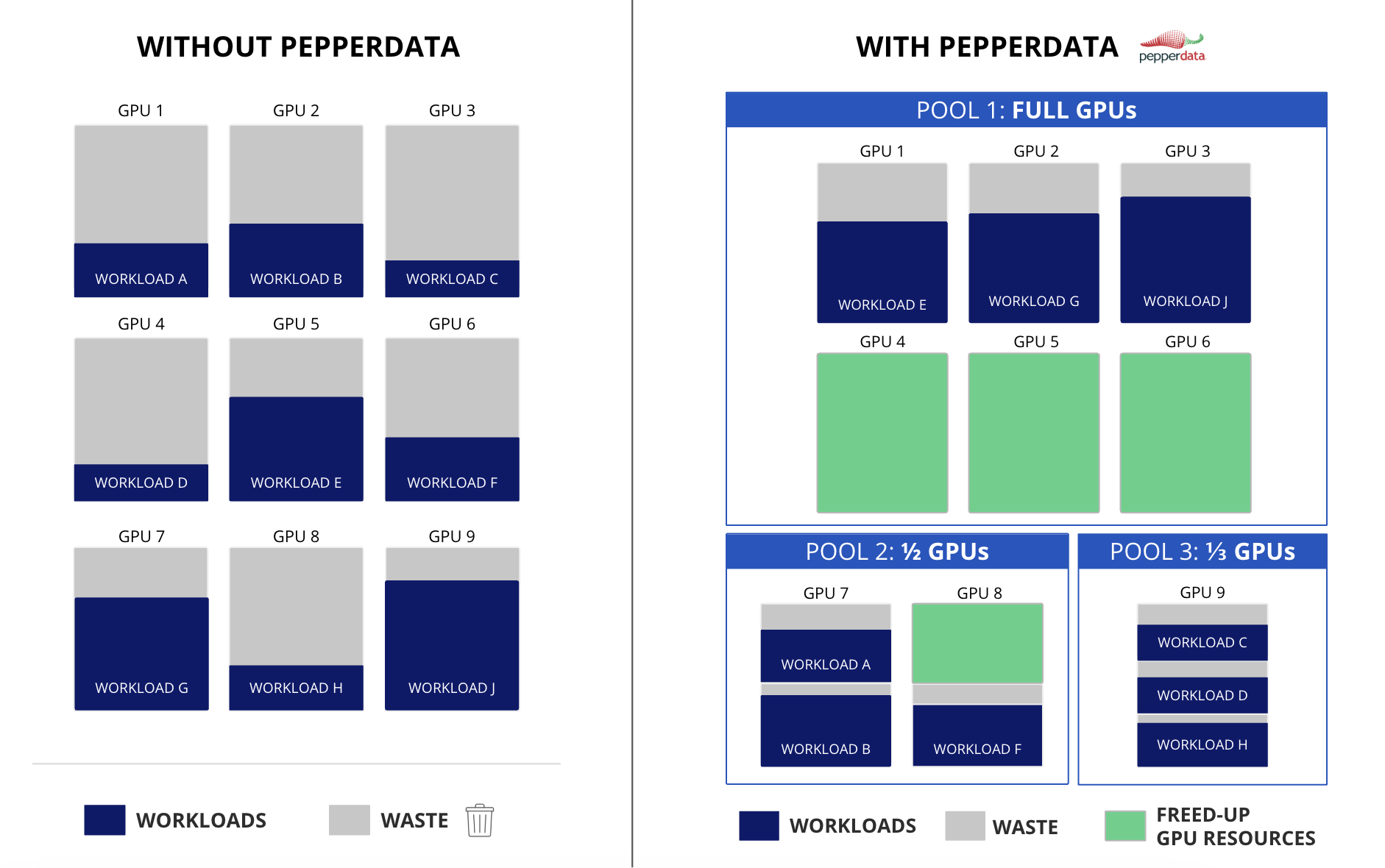

Pepperdata Resource Optimization solves the challenge of GPU optimization by automating GPU slicing at scale. Leveraging NVIDIA’s MIG feature, Pepperdata automatically partitions single GPUs into secure, independent slices and organizes them into three dynamic pools:

- Full GPU – Dedicated for demanding workloads requiring an entire GPU.

- ½ GPU (2 MIG slices per GPU) – For medium workloads needing only half a GPU.

- ⅓ GPU (3 MIG slices per GPU) – Ideal for lighter workloads that fit within a third of a GPU.
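To make the three pools concrete, here is a minimal sketch of how a workload could be matched to the smallest pool that covers its needs. It is an illustration only, not Pepperdata's implementation: the `choose_pool` function, the pool names, and the idea of expressing a workload's need as a fraction of one GPU are all assumptions made for this example.

```python
# Hypothetical sketch: pick the smallest pool whose slice covers a workload.
# Pool sizes (fractions of one GPU) mirror the three pools listed above;
# the names and the selection rule are assumptions, not Pepperdata's API.
POOLS = {
    "third": 1 / 3,
    "half": 1 / 2,
    "full": 1.0,
}


def choose_pool(required_fraction: float) -> str:
    """Return the name of the smallest pool that fits the required GPU fraction."""
    if not 0 < required_fraction <= 1:
        raise ValueError("required_fraction must be in the range (0, 1]")
    # Walk the pools from smallest to largest and stop at the first fit.
    for name, size in sorted(POOLS.items(), key=lambda item: item[1]):
        if required_fraction <= size:
            return name
    return "full"


if __name__ == "__main__":
    for need in (0.25, 0.4, 0.9):
        print(f"needs {need:.2f} of a GPU -> {choose_pool(need)} pool")
```

Choosing the smallest slice that fits is what keeps a light inference job from occupying an entire GPU.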

Additionally, Pepperdata monitors workloads and rightsizes their GPU requests based on historical data. This ensures that no workload is allocated more than it needs, without users having to do anything.

Rightsizing and pool management are handled automatically, so developers and platform owners don't need to worry about using GPUs optimally.
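One common way to rightsize a request from history is to take a high percentile of observed peak usage and add a little headroom. The sketch below assumes that approach purely for illustration. The source does not describe Pepperdata's actual method, and the function name, the 95th-percentile default, and the 10% headroom are all hypothetical.

```python
import math


def rightsized_request(observed_peaks_gib, percentile=95, headroom=0.10):
    """Suggest a GPU memory request (GiB) from historical peak usage.

    Hypothetical rule: nearest-rank percentile of past peaks plus headroom.
    The defaults are illustrative assumptions, not Pepperdata settings.
    """
    if not observed_peaks_gib:
        raise ValueError("at least one observation is required")
    ordered = sorted(observed_peaks_gib)
    # Nearest-rank percentile: the smallest value with at least
    # `percentile` percent of observations at or below it.
    rank = max(1, math.ceil(percentile * len(ordered) / 100))
    baseline = ordered[rank - 1]
    return baseline * (1 + headroom)


if __name__ == "__main__":
    history = [4, 5, 6, 7, 8, 9, 10, 11, 12, 30]  # made-up peak usage in GiB
    print(rightsized_request(history, percentile=90))
```

Using a percentile rather than the maximum keeps a single outlier run from inflating every future request, at the cost of occasionally undersizing a run that exceeds the chosen percentile.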

Without Pepperdata, GPUs often operate at a fraction of their capacity, generating unnecessary cloud charges or wasting on-premises resources.

With Pepperdata installed, GPUs are automatically sliced and intelligently packed to maximum capacity, boosting efficiency and minimizing waste.
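The arithmetic behind this before-and-after comparison can be sketched in a few lines. The example below assumes each GPU is dedicated to one pool and uses the slice counts given earlier (1, 2, or 3 slices per GPU). The `gpus_needed` helper and the sample workload mix are hypothetical.

```python
import math
from collections import Counter

# Slices per GPU for each pool, as described in the pool list above.
SLICES_PER_GPU = {"full": 1, "half": 2, "third": 3}


def gpus_needed(pool_assignments):
    """Return GPUs required per pool when workloads share sliced GPUs.

    Hypothetical helper: assumes each GPU serves a single pool.
    """
    counts = Counter(pool_assignments)
    return {
        pool: math.ceil(count / SLICES_PER_GPU[pool])
        for pool, count in counts.items()
    }


if __name__ == "__main__":
    # Made-up mix: six light, three medium, and one demanding workload.
    workloads = ["third"] * 6 + ["half"] * 3 + ["full"]
    sliced = gpus_needed(workloads)
    print("one GPU per workload:", len(workloads))
    print("with slicing:", sum(sliced.values()), sliced)
```

In this made-up mix, ten workloads that would otherwise occupy ten GPUs fit on five once the lighter ones share sliced GPUs.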

How Automated GPU Resource Optimization Improves Efficiency and Performance

- Rightsizing the workloads – Pepperdata increases GPU compute and memory utilization, making more effective use of capacity in the cloud or on-premises.

- Automatically creating and managing the GPU slices – More available GPUs and increased utilization mean more workloads run to completion with the same resources. Smaller workloads also finish faster, without waiting for full GPUs to free up.

- Freeing platform owners and developers to focus on their core work – Teams can focus on developing and deploying new AI applications rather than on routine manual optimization tasks that save only a small percentage of spend.

Eliminate the Guesswork of GPU Resource Allocation with Pepperdata.ai

Pepperdata’s GPU Resource Optimization removes the manual effort from GPU slicing, rightsizing workloads and placing them intelligently to minimize resource waste. Organizations can now get the most out of their GPUs without the complexity of manual tuning.

The Bottom Line

Pepperdata turns GPU waste into GPU efficiency. By automating slicing and workload placement, organizations maximize performance, minimize costs, and unlock the full potential of their GPU investments.

Ready to get more out of every GPU? Pepperdata’s automated GPU slicing helps you maximize utilization, run more workloads with fewer resources, and slash costs, all without manual tuning. Request a demo to see Pepperdata GPU Optimization in action, or join the waitlist to get access to Pepperdata in your environment.